The µONOS SDN platform is the next-generation architecture of ONOS. It evolved from our experience building and deploying ONOS (now referred to as ONOS-classic), which has been a leader in the open-source SDN control plane space.

The principal goal of this project, which is still under way, is to update the architectural approach of the SDN controller along with its founding technologies. On the architectural front, the project centers on building its components as microservices that communicate with one another via gRPC APIs and, in turn, expose their own interfaces. This approach allows far more flexibility in how a control plane is deployed and scaled than ONOS-classic does. Furthermore, deployments can be managed using industry-standard tools such as Kubernetes, Helm and Rancher.

On the network control side, the principal control abstractions are centered on P4Runtime and gNMI, which supersede OpenFlow as the focal point. This gives applications far greater control, allows native interactions with programmable switches, IPUs, NICs, etc., and lets control responsibilities over different parts of the pipeline be divided among different applications.

The µONOS effort started approximately 3 years ago and initially focused on laying the foundation, which included the gRPC API (onos-api), a general-purpose NIB (onos-topo) and a consolidated kubectl-like CLI (onos-cli). A few projects were then built atop this simple foundation. One of them is onos-config, a gNMI configuration subsystem for managing the configuration of many devices and software entities. This project can effectively be thought of as an extension of the µONOS platform itself. Another is SD-RAN, which aims to provide SDN control for the RAN edge and involves a number of components that adhere to the general principles of the O-RAN architecture, its E2 control protocol, and its various E2 Service Models.

More recently, however, focus has returned to the SD-Fabric use case, which falls into the more classic SDN realm and brings with it several new µONOS platform components. This is what we would like to update the ONOS community on.

The µONOS for SD-Fabric project consists of a suite of components and libraries, following established µONOS patterns, aimed at replacing ONOS-classic for programming data-plane forwarding behavior. The SD-Fabric use case is the proximate driving force behind the priorities and design decisions for this project. However, nearly all of the components should ultimately be usable in a more general context.

Scaling, Performance & Resilience

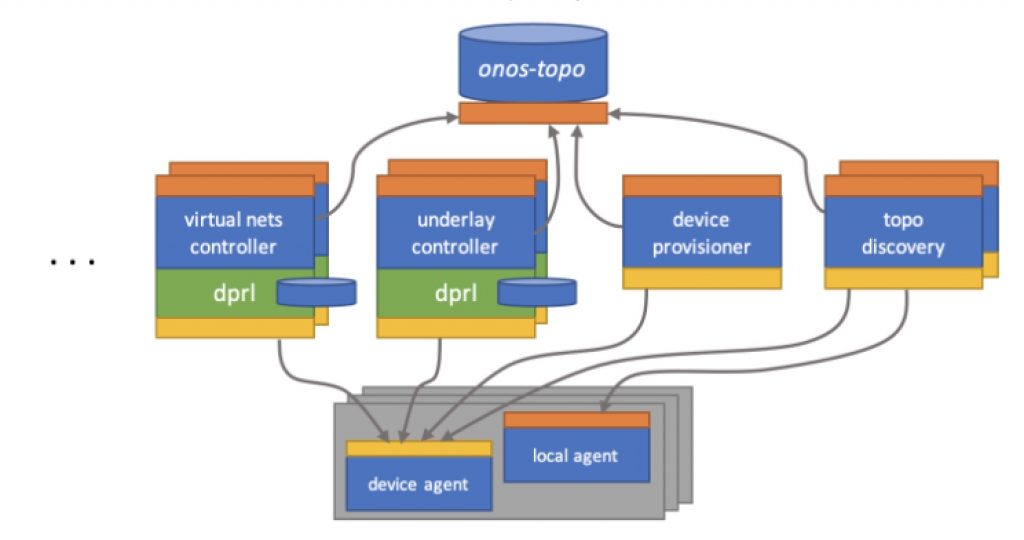

The platform architecture aims to scale to at least 1.1K devices (100 switches and 1K IPUs) and ~20K hosts and VMs. The intent is to accomplish this goal using the platform’s capability for horizontal scaling, which distributes the control I/O load and reduces the “blast radius” of a component failure. At such scales, the control domain is to be subdivided into non-overlapping realms, such as pods of racks or individual racks, with each realm managed by a group of control components and apps dedicated to it. All such control groups are to operate on a common NIB (onos-topo) and thus indirectly federate into a single control domain. Realms are described via label/value pairs that can be attached to each entity or relation in the NIB. Most of the control components discussed below support operating on a specified NIB realm.
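To make the realm idea concrete, here is a minimal Go sketch that partitions NIB entities into realms by the value of a single label. The `Entity` type, the `PartitionByRealm` function and the label key `realm` are hypothetical illustrations rather than the onos-topo API, and relations are left out for brevity.

```go
package main

import (
	"fmt"
	"sort"
)

// Entity is a hypothetical, simplified stand-in for a NIB entity;
// the real onos-topo API defines its own types.
type Entity struct {
	ID     string
	Kind   string
	Labels map[string]string // label/value pairs, e.g. "realm" -> "rack-1"
}

// PartitionByRealm groups entity IDs by the value of the given label key.
// Each entity carries at most one value per key, so realms never overlap.
// Entities without the label end up under the empty realm "".
func PartitionByRealm(entities []Entity, key string) map[string][]string {
	realms := make(map[string][]string)
	for _, e := range entities {
		realm := e.Labels[key] // "" when the label is absent
		realms[realm] = append(realms[realm], e.ID)
	}
	for _, ids := range realms {
		sort.Strings(ids) // sorts in place, giving a deterministic order
	}
	return realms
}

func main() {
	nib := []Entity{
		{ID: "leaf1", Kind: "switch", Labels: map[string]string{"realm": "rack-1"}},
		{ID: "ipu1", Kind: "ipu", Labels: map[string]string{"realm": "rack-1"}},
		{ID: "leaf2", Kind: "switch", Labels: map[string]string{"realm": "rack-2"}},
		{ID: "spine1", Kind: "switch", Labels: map[string]string{}},
	}
	realms := PartitionByRealm(nib, "realm")
	// A control group dedicated to rack-1 would act only on these entities.
	fmt.Println(realms["rack-1"]) // [ipu1 leaf1]
	fmt.Println(realms["rack-2"]) // [leaf2]
}
```

Because a label key holds one value per entity, this style of partitioning gives the non-overlapping realms described above without any extra bookkeeping.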

Key Platform Components

The following components form part of the general platform, as they are not inherently specific to the SD-Fabric solution or any other use case. All of them communicate via gRPC APIs using TLS; an option to bypass TLS is also available. The components are available as Docker images and have corresponding Helm charts in the onos-helm-charts repository.

Persistent NIB

This subsystem persists network entities using an extensible and elastic Entity, Relation and Kind model, which it exposes to other components and applications via its northbound (NB) gRPC API. As such, it is suitable for storing and retrieving information about major network assets, e.g. devices, links, controllers, hosts and virtual network entities.

The information, organized as a dynamic graph, can easily be searched, browsed and monitored by any platform or application component.
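As a rough illustration of the Entity, Relation and Kind model, the following Go sketch keeps entities and relations in memory and supports searching by kind and browsing along relations. All type and method names (`Object`, `Graph`, `EntitiesOfKind`, `Targets`) are hypothetical; onos-topo defines its own protobuf types and gRPC services, and monitoring (watching for changes) is not shown.

```go
package main

import (
	"fmt"
	"sort"
)

// Object is a hypothetical, simplified NIB object. An entity has an ID and a
// Kind; a relation additionally names its source and target entities.
type Object struct {
	ID     string
	Kind   string // e.g. "switch", "host", or a relation kind such as "link"
	Source string // set only for relations
	Target string // set only for relations
}

// Graph is an in-memory sketch of the NIB's dynamic graph.
type Graph struct {
	entities  map[string]Object
	relations []Object
}

func NewGraph() *Graph { return &Graph{entities: map[string]Object{}} }

func (g *Graph) AddEntity(id, kind string) {
	g.entities[id] = Object{ID: id, Kind: kind}
}

// AddRelation connects two existing entities and reports whether it
// succeeded; relations with unknown endpoints are refused.
func (g *Graph) AddRelation(id, kind, src, tgt string) bool {
	_, okSrc := g.entities[src]
	_, okTgt := g.entities[tgt]
	if !okSrc || !okTgt {
		return false
	}
	g.relations = append(g.relations, Object{ID: id, Kind: kind, Source: src, Target: tgt})
	return true
}

// EntitiesOfKind searches the graph for entities of the given kind and
// returns their sorted IDs.
func (g *Graph) EntitiesOfKind(kind string) []string {
	var ids []string
	for id, e := range g.entities {
		if e.Kind == kind {
			ids = append(ids, id)
		}
	}
	sort.Strings(ids)
	return ids
}

// Targets browses the graph: it returns the sorted IDs of entities reached
// from src over relations of the given kind.
func (g *Graph) Targets(src, relKind string) []string {
	var ids []string
	for _, r := range g.relations {
		if r.Source == src && r.Kind == relKind {
			ids = append(ids, r.Target)
		}
	}
	sort.Strings(ids)
	return ids
}

func main() {
	g := NewGraph()
	g.AddEntity("spine1", "switch")
	g.AddEntity("leaf1", "switch")
	g.AddEntity("leaf2", "switch")
	g.AddEntity("h1", "host")
	g.AddRelation("l1", "link", "spine1", "leaf1")
	g.AddRelation("l2", "link", "spine1", "leaf2")
	g.AddRelation("c1", "connects", "leaf1", "h1")
	fmt.Println(g.EntitiesOfKind("switch"))                     // [leaf1 leaf2 spine1]
	fmt.Println(g.Targets("spine1", "link"))                    // [leaf1 leaf2]
	fmt.Println(g.AddRelation("l3", "link", "spine1", "leaf9")) // false
}
```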

For more information, see the onos-topo GitHub repository.

Device Provisioner Component

This component is primarily responsible for configuring and reconciling device forwarding pipelines using P4Runtime, and for device chassis configuration via gNMI.

It exposes an NB gRPC API for managing different sets of pipeline configuration artifacts (p4info, architecture-specific binaries, etc.) and chassis configuration artifacts. Support for additional types of configuration blobs and upload protocols, e.g. gNOI, may be added in the future.

This API is split into two parts:

- Service for managing the inventory of pipeline configurations (add/delete/get/etc.)

- Service for monitoring the status of pipeline reconciliation on devices

Mapping of pipeline configurations to devices is to be done separately, via an aspect attached to the device entity in onos-topo.
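The split between the configuration inventory and the per-device mapping might look roughly like the Go sketch below, assuming a device entity whose aspects map a hypothetical `pipeline-config` aspect name to a configuration ID. The types, method names and status strings (`UNASSIGNED`, `PENDING`, `IN_SYNC`) are illustrative assumptions rather than the device-provisioner API, and the actual P4Runtime push is left out.

```go
package main

import "fmt"

// PipelineConfig is a hypothetical artifact set; the real
// device-provisioner API defines its own message types.
type PipelineConfig struct {
	ID     string
	P4Info []byte // P4Runtime p4info
	Binary []byte // architecture-specific device binary
}

// Inventory sketches the service that manages pipeline configurations.
type Inventory struct{ configs map[string]PipelineConfig }

func NewInventory() *Inventory { return &Inventory{configs: map[string]PipelineConfig{}} }

// Add stores a new configuration and rejects duplicate IDs.
func (inv *Inventory) Add(c PipelineConfig) error {
	if _, exists := inv.configs[c.ID]; exists {
		return fmt.Errorf("pipeline config %q already exists", c.ID)
	}
	inv.configs[c.ID] = c
	return nil
}

func (inv *Inventory) Get(id string) (PipelineConfig, bool) {
	c, ok := inv.configs[id]
	return c, ok
}

func (inv *Inventory) Delete(id string) { delete(inv.configs, id) }

// Device stands in for a device entity in onos-topo. The aspect name
// "pipeline-config" and the Applied field are hypothetical.
type Device struct {
	ID      string
	Aspects map[string]string
	Applied string // ID of the config currently running on the device
}

// Status sketches what the monitoring service might report for one device.
func Status(inv *Inventory, d Device) (string, error) {
	want, ok := d.Aspects["pipeline-config"]
	if !ok {
		return "UNASSIGNED", nil
	}
	if _, ok := inv.Get(want); !ok {
		return "", fmt.Errorf("device %s references unknown config %q", d.ID, want)
	}
	if d.Applied == want {
		return "IN_SYNC", nil
	}
	// A real provisioner would now push the config via P4Runtime.
	return "PENDING", nil
}

func main() {
	inv := NewInventory()
	_ = inv.Add(PipelineConfig{ID: "fabric-v1"}) // artifact contents omitted
	leaf := Device{ID: "leaf1", Aspects: map[string]string{"pipeline-config": "fabric-v1"}}
	s, _ := Status(inv, leaf)
	fmt.Println(s) // PENDING
	leaf.Applied = "fabric-v1"
	s, _ = Status(inv, leaf)
	fmt.Println(s) // IN_SYNC
}
```

Keeping the device-to-config mapping on the device entity, rather than inside the inventory, means the inventory service never needs to know which devices exist.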

For more information, see the device-provisioner GitHub repository.

Topology Discovery Component

The discovery component is responsible for discovering devices (switches and IPUs), their connectivity via infrastructure links, and connected end-stations (hosts). Discovery of links and hosts will occur indirectly through local agents, which distributes the I/O load and helps maintain performance at scale.

For the most part, the component will operate autonomously. However, it will also expose an auxiliary gRPC API that allows coarse-grained control of the discovery mechanism, e.g., triggering full discovery or triggering only device port/link discovery.
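Link discovery through local agents can be sketched as follows: each agent reports the LLDP frames it receives, and the central component turns those reports into infrastructure links. The `LLDPReport` and `Link` types and the `InferLinks` function are hypothetical; the sketch assumes each report carries the sending device and port IDs as advertised in the standard LLDP Chassis ID and Port ID TLVs.

```go
package main

import (
	"fmt"
	"sort"
)

// LLDPReport is a hypothetical record an agent might send upstream after
// receiving an LLDP frame on one of its ports.
type LLDPReport struct {
	RxDevice, RxPort string // receiving side (the reporting agent)
	TxDevice, TxPort string // sending side, as advertised in the LLDP frame
}

// Link is a unidirectional infrastructure link between two device ports.
type Link struct {
	Src, Dst string // "device/port"
}

// InferLinks turns agent reports into a de-duplicated, sorted set of
// unidirectional links (transmitting port -> receiving port).
func InferLinks(reports []LLDPReport) []Link {
	seen := map[Link]bool{}
	var links []Link
	for _, r := range reports {
		l := Link{Src: r.TxDevice + "/" + r.TxPort, Dst: r.RxDevice + "/" + r.RxPort}
		if !seen[l] {
			seen[l] = true
			links = append(links, l)
		}
	}
	sort.Slice(links, func(i, j int) bool {
		if links[i].Src != links[j].Src {
			return links[i].Src < links[j].Src
		}
		return links[i].Dst < links[j].Dst
	})
	return links
}

func main() {
	reports := []LLDPReport{
		{RxDevice: "leaf1", RxPort: "1", TxDevice: "spine1", TxPort: "3"},
		{RxDevice: "spine1", RxPort: "3", TxDevice: "leaf1", TxPort: "1"},
		{RxDevice: "leaf1", RxPort: "1", TxDevice: "spine1", TxPort: "3"}, // repeated probe
	}
	for _, l := range InferLinks(reports) {
		fmt.Println(l.Src, "->", l.Dst)
	}
	// leaf1/1 -> spine1/3
	// spine1/3 -> leaf1/1
}
```

Each direction of a physical link is reported by a different agent, so a bidirectional link shows up as two unidirectional entries.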

For more information, see the topo-discovery GitHub repository.

Switch Local Discovery Agent

The local agent (LA) components are expected to be deployed on each data-plane device (switch or IPU). At a minimum, they are responsible for locally emitting and handling ARP and LLDP packets for the purpose of host and infrastructure link discovery.

The LA will provide access to the discovered information and state via gNMI and a custom YANG model. The same mechanism should also allow configuration of the agent's host and link discovery behavior.
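Host discovery from ARP on the agent side could work along the lines of this Go sketch, which learns a host's MAC address, IP address and port from the sender fields of ARP packets. The `Host` and `HostTable` types, the `LearnFromARP` method, and the policy of ignoring ports that carry infrastructure links are assumptions made for illustration, not taken from the discovery-agent code.

```go
package main

import (
	"fmt"
	"net"
)

// Host is what a hypothetical agent records for a discovered end-station.
type Host struct {
	MAC  string
	IP   string
	Port uint32 // edge port where the host was seen
}

// HostTable is a hypothetical per-device host table keyed by MAC address.
type HostTable map[string]Host

// LearnFromARP records the sender of an ARP packet received on port.
// Ports known to carry infrastructure links (found via LLDP) are ignored,
// since ARP arriving there may come from hosts behind another device.
// It returns true when the table changed (new host or host moved).
func (t HostTable) LearnFromARP(port uint32, senderMAC, senderIP string, infraPorts map[uint32]bool) (bool, error) {
	if infraPorts[port] {
		return false, nil
	}
	mac, err := net.ParseMAC(senderMAC)
	if err != nil {
		return false, err
	}
	ip := net.ParseIP(senderIP)
	if ip == nil {
		return false, fmt.Errorf("invalid sender IP %q", senderIP)
	}
	h := Host{MAC: mac.String(), IP: ip.String(), Port: port} // normalized forms
	if old, ok := t[h.MAC]; ok && old == h {
		return false, nil
	}
	t[h.MAC] = h
	return true, nil
}

func main() {
	table := HostTable{}
	infra := map[uint32]bool{1: true} // port 1 faces a spine
	changed, _ := table.LearnFromARP(5, "00:00:5E:00:53:01", "192.0.2.10", infra)
	fmt.Println(changed) // true
	changed, _ = table.LearnFromARP(5, "00:00:5e:00:53:01", "192.0.2.10", infra)
	fmt.Println(changed) // false: already known at the same port
	changed, _ = table.LearnFromARP(1, "00:00:5e:00:53:02", "192.0.2.11", infra)
	fmt.Println(changed, len(table)) // false 1
}
```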

For more information, see the discovery-agent GitHub repository.

Consolidated CLI

All major components have command-line packages in the `onos-cli` repository, which together form a consolidated CLI client, similar to what `kubectl` provides for Kubernetes.

For more information and detailed CLI usage documentation, see the onos-cli GitHub repository.

Fabric Simulator

Fabric Simulator simulates a network of switches, IPUs and hosts via P4Runtime, gNMI and gNOI control interfaces. Controllers and applications can use these interfaces to learn about the network devices and the topology of the network. Note that, unlike `mininet`, `fabric-sim` does not actually emulate data-plane traffic. It merely emulates control interactions and mimics certain data-plane behaviors. For example, if an application emits an LLDP packet-out via P4Runtime, the application will receive a corresponding LLDP packet-in from the neighboring device.

The simulator can be run as a standalone application or as a Docker container. This makes it a cost-effective means of stress-testing controllers and applications at scale, and a lightweight fixture for software engineers developing and testing their applications.

For more information, see the fabric-sim GitHub repository.

Miscellaneous

There are also various library modules, such as onos-lib-go and onos-net-lib. These provide facilities that are used by the platform components and are also applicable to custom SDN application components.

Links to key GitHub repositories:

- [onos-api](https://github.com/onosproject/onos-api)

- [onos-cli](https://github.com/onosproject/onos-cli)

- [onos-topo](https://github.com/onosproject/onos-topo)

- [device-provisioner](https://github.com/onosproject/device-provisioner)

- [topo-discovery](https://github.com/onosproject/topo-discovery)

- [discovery-agent](https://github.com/onosproject/discovery-agent)

- [fabric-sim](https://github.com/onosproject/fabric-sim)

- [onos-helm-charts](https://github.com/onosproject/onos-helm-charts)